Abstract

The Flex System CN4058S 8-port 10Gb Virtual Fabric Adapter and CN4052 2-port 10Gb Virtual Fabric Adapter are part of the VFA5 family of System x and Flex System adapters. These adapters support up to four virtual NIC (vNIC) devices per port (for a total of 32 for the CN4058S and 8 for the CN4052), where each physical 10 GbE port can be divided into four virtual ports with flexible bandwidth allocation. These adapters also feature RDMA over Converged Ethernet (RoCE) capability, and they support the iSCSI and FCoE protocols with the addition of a Features on Demand (FoD) license upgrade.

Watch the video walk-through of the adapters with David Watts and Tom Boucher

Introduction


With hardware protocol offloads for TCP/IP and FCoE, the CN4058S and CN4052 provide maximum bandwidth with minimum use of CPU resources and enable more VMs per server, which provides greater cost savings and improves return on investment. With up to eight ports, the CN4058S in particular makes full use of the capabilities of all supported Ethernet switches in the Flex System portfolio.

Figure 1 shows the CN4058S 8-port 10Gb Virtual Fabric Adapter.

Figure 1. Flex System CN4058S 8-port 10Gb Virtual Fabric Adapter

Did you know?

The CN4058S is based on industry-standard PCIe 3.0 architecture and offers the flexibility to operate as a Virtual NIC Fabric Adapter or as an 8-port 10 Gb or 1 Gb Ethernet device. Because this adapter supports up to 32 virtual NICs on a single adapter, you gain virtualization-related benefits, such as lower cost, reduced power and cooling, and a smaller data center footprint, by deploying less hardware. Servers, such as the new x240 M5 Compute Node, support up to two of these adapters for a total of 64 virtual NICs per system.

Part number information

Withdrawn: The adapters are withdrawn from marketing.

Table 1. Ordering part numbers and feature codes

| Part number | Feature code | Description |
|---|---|---|
| Adapters (withdrawn from marketing) | | |
| 94Y5160* | A4R6 | Flex System CN4058S 8-port 10Gb Virtual Fabric Adapter |
| 00JY800* | A5RP | Flex System CN4052 2-port 10Gb Virtual Fabric Adapter |
| License upgrades | | |
| 94Y5164 | A4R9 | Flex System CN4058S Virtual Fabric Adapter SW Upgrade (FoD) |
| 00JY804 | A5RV | Flex System CN4052 Virtual Fabric Adapter SW Upgrade (FoD) |

* Withdrawn from marketing

Features

The CN4058S 8-port 10Gb Virtual Fabric Adapter and CN4052 2-port 10Gb Virtual Fabric Adapter, which are part of the VFA5 family of System x and Flex System adapters, reduce cost by enabling a converged infrastructure and improve performance with powerful offload engines. The adapters have the following features and benefits:

- Multi-protocol support for 10 GbE

The adapters offer two (CN4052) or eight (CN4058S) 10 GbE connections and are cost- and performance-optimized for integrated and converged infrastructures. They offer a “triple play” of converged data, storage, and low-latency RDMA networking on a common Ethernet fabric. The adapters provide customers with a flexible storage protocol option for running heterogeneous workloads on their increasingly converged infrastructures.

- Virtual NIC emulation

The Emulex VFA5 family supports three NIC virtualization modes out of the box: Virtual Fabric mode (vNIC1), Switch Independent mode (vNIC2), and Unified Fabric Port (UFP). With NIC virtualization, each physical port on the adapter can be logically configured to emulate up to four virtual NIC (vNIC) functions with user-definable bandwidth settings. Additionally, each physical port can simultaneously support a storage protocol (FCoE or iSCSI).

- Full hardware storage offloads

These adapters support an optional FCoE hardware offload engine, which is enabled via Lenovo Features on Demand (FoD). This offload engine accelerates storage protocol processing and delivers up to 1.5 million I/O operations per second (IOPS), which enables the server’s processing resources to focus on applications and improves the server’s performance.

- Power savings

When compared with previous generation Emulex VFA3 adapters, the Emulex VFA5 adapters can save up to 50 watts per server, reducing energy and cooling OPEX through improved storage offloads.

- Lenovo Features on Demand (FoD)

The adapters use FoD software activation technology. FoD enables the adapters to be initially deployed as low-cost Ethernet NICs and then later upgraded in the field to support FCoE or iSCSI hardware offload.

- Emulex Virtual Network Acceleration (VNeX) technology support

Emulex VNeX supports Microsoft Network Virtualization using Generic Routing Encapsulation (NVGRE) and VMware-supported Virtual Extensible LAN (VXLAN). These technologies create more logical LANs for the traffic isolation that cloud architectures need. Because these protocols increase the processing burden on the server’s processor, the adapters have an offload engine that is designed specifically for processing these tags. As a result, cloud providers can use VXLAN and NVGRE without a reduction in the server’s performance.

- Advanced RDMA capabilities

RDMA over Converged Ethernet (RoCE) delivers application and storage acceleration through faster I/O operations. It is best suited for environments such as Microsoft SMB Direct in Windows Server 2012 R2. It can also support Linux NFS protocols.

With RoCE, the VFA5 adapter helps accelerate workloads in the following ways:

- Capability to deliver a 4x boost in small packet network performance vs. previous generation adapters, which is critical for transaction-heavy workloads

- Desktop-like experiences for VDI with up to 1.5 million FCoE or iSCSI IOPS

- Ability to scale Microsoft SQL Server, Exchange, and SharePoint using SMB Direct optimized with RoCE

- More VDI desktops/server due to up to 18% higher CPU effectiveness (the percentage of server CPU utilization for every 1 Mbps I/O throughput)

- Superior application performance for VMware hybrid cloud workloads due to up to 129% higher I/O throughput compared to adapters without offload

- Deploy faster and manage less when you combine Virtual Fabric Adapters (VFAs) and Host Bus Adapters (HBAs) that are developed by Emulex

VFAs and HBAs that are developed by Emulex use the same installation and configuration process, which streamlines the effort to get your server running and saves you valuable time. They also use the same Fibre Channel drivers, which reduces the time to qualify and manage storage connectivity. With Emulex OneCommand Manager, you can manage these VFAs and HBAs across the data center from a single console.

Specifications

The Flex System CN4058S 8-port 10Gb Virtual Fabric Adapter features the following specifications:

- Eight-port 10 Gb Ethernet adapter

- Dual-ASIC controller using the Emulex XE104 design

- Two PCI Express 3.0 x8 host interfaces (8 GT/s)

- MSI-X support

- Fabric Manager support

- Power consumption: 25 W maximum

The CN4052 2-port 10Gb Virtual Fabric Adapter has these specifications:

- Two-port 10 Gb Ethernet adapter

- Single-ASIC controller using the Emulex XE104 design

- One PCI Express 3.0 x8 host interface (8 GT/s)

- MSI-X support

- Fabric Manager support

- Power consumption: 25 W maximum

Ethernet features

The following Ethernet features are included in both adapters:

- IPv4/IPv6 TCP and UDP checksum offload, Large Send Offload (LSO), Large Receive Offload, Receive Side Scaling (RSS), TCP Segmentation Offload (TSO)

- VLAN insertion and extraction

- Jumbo frames up to 9216 Bytes

- Priority Flow Control (PFC) for Ethernet traffic

- Interrupt coalescing

- Load balancing and failover support, including adapter fault tolerance (AFT), switch fault tolerance (SFT), adaptive load balancing (ALB), link aggregation, and IEEE 802.1AX

- 4 PCIe Physical Functions (PFs) per port

- 31 configurable PCIe Virtual Functions (VFs) for NIC per port

- Advanced Error Reporting (AER)

- Supports D0, D3 (hot & cold) power management modes

- Completion Timeout (CTO)

- Function Level Reset (FLR)

- Network boot support:

- PXE Boot

- iSCSI Boot (with FoD upgrade)

- FCoE Boot (with FoD upgrade)

- Virtual Fabric support:

- Virtual Fabric mode (vNIC1)

- Switch Independent mode (vNIC2)

- Unified Fabric Port (UFP) support

- Emulex VNeX Technology full hardware offload support:

- Increases VM Density and decreases CPU power draw

- Microsoft Network Virtualization using Generic Routing Encapsulation (NVGRE)

- VMware Virtual Extensible LAN (VXLAN)

- VM mobility acceleration, which offloads header encapsulation from processor to network controller

- Remote Direct Memory Access over Converged Ethernet (RoCE) support:

- Accelerates application and storage I/O operations

- Supports Windows Server SMB Direct

- Required for low-latency performance

- Single Root I/O Virtualization (SR-IOV) support:

- Maximum of 128 Virtual Functions

- Minimizes VM I/O overhead and improves adapter performance
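On Linux hosts, SR-IOV Virtual Functions are usually requested through the kernel's generic sysfs attributes `sriov_totalvfs` and `sriov_numvfs` in the network interface's `device` directory. The following Python sketch assumes that the adapter driver in use exposes this standard interface and that the script runs as root; the helper name `set_num_vfs` and the interface name in the usage note are hypothetical. It is not a Lenovo or Emulex tool, and the VF count that is actually available also depends on firmware, UEFI, and vNIC mode settings.

```python
from pathlib import Path


def set_num_vfs(ifname: str, num_vfs: int, sysfs_root: str = "/sys/class/net") -> int:
    """Request num_vfs SR-IOV Virtual Functions on a Linux network interface.

    Assumes the driver exposes the generic sysfs attributes sriov_totalvfs
    and sriov_numvfs; they are absent when SR-IOV is unsupported or disabled.
    """
    device = Path(sysfs_root) / ifname / "device"
    total = int((device / "sriov_totalvfs").read_text().strip())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{ifname}: requested {num_vfs} VFs, device allows 0..{total}")

    numvfs_path = device / "sriov_numvfs"
    current = int(numvfs_path.read_text().strip())
    if current != num_vfs:
        # The kernel rejects a change from one non-zero VF count to another,
        # so disable the existing VFs first.
        if current != 0 and num_vfs != 0:
            numvfs_path.write_text("0")
        numvfs_path.write_text(str(num_vfs))
    return num_vfs
```

For example, `set_num_vfs("eth2", 8)` would request eight VFs on a hypothetical interface `eth2`. The `sysfs_root` parameter exists only so the logic can be exercised against a scratch directory instead of the live `/sys` tree.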

FCoE features (with FoD upgrade)

The following FCoE features (with FoD upgrade) are included:

- Hardware offloads of Ethernet TCP/IP

- ANSI T11 FC-BB-5 Support

- Programmable World Wide Name (WWN)

- Support for FIP and FCoE EtherTypes

- Support for up to 255 NPIV Interfaces per port

- FCoE Initiator

- Common driver for Emulex Universal CNA and Fibre Channel HBAs

- Fabric Provided MAC Addressing (FPMA) support

- 1024 concurrent port logins (RPIs) per port

- 1024 active exchanges (XRIs) per port
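Two of the FCoE features above rest on FC-BB-5 conventions that are easy to show concretely: FCoE and FIP frames are identified by their own EtherTypes (0x8906 and 0x8914), and a Fabric Provided MAC Address (FPMA) is formed by placing the 24-bit FC-MAP value (0E-FC-00 by default) in front of the 24-bit FC_ID that the fabric assigns at login. The following Python sketch illustrates only that addressing arithmetic; the function names are hypothetical, and on a real system the adapter and the Fibre Channel Forwarder perform these steps in hardware and firmware.

```python
FCOE_ETHERTYPE = 0x8906    # EtherType of FCoE-encapsulated Fibre Channel frames
FIP_ETHERTYPE = 0x8914     # EtherType of FCoE Initialization Protocol frames
DEFAULT_FC_MAP = 0x0EFC00  # default FC-MAP prefix defined in FC-BB-5


def fpma_mac(fc_id: int, fc_map: int = DEFAULT_FC_MAP) -> str:
    """Build an FPMA: the 24-bit FC-MAP followed by the 24-bit FC_ID."""
    if not 0 <= fc_id <= 0xFFFFFF or not 0 <= fc_map <= 0xFFFFFF:
        raise ValueError("FC-MAP and FC_ID must each fit in 24 bits")
    value = (fc_map << 24) | fc_id
    return ":".join(f"{octet:02x}" for octet in value.to_bytes(6, "big"))


def classify_ethertype(ethertype: int) -> str:
    """Label a frame's EtherType as FCoE, FIP, or other traffic."""
    return {FCOE_ETHERTYPE: "FCoE", FIP_ETHERTYPE: "FIP"}.get(ethertype, "other")
```

For example, a port that the fabric assigns FC_ID 01-02-03 would use the MAC address `0e:fc:00:01:02:03` under the default FC-MAP.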

iSCSI features (with FoD upgrade)

The following iSCSI features (with FoD upgrade) are included:

- Full iSCSI Protocol Offload

- Header, Data Digest (CRC), and PDU

- Direct data placement of SCSI data

- 2048 Offloaded iSCSI connections

- iSCSI initiator and concurrent initiator or target modes

- Multipath I/O

- OS-independent INT13-based iSCSI boot and iSCSI crash dump support

- RFC 4171 Internet Storage Name Service (iSNS)
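The iSCSI header and data digests that the adapter offloads are CRC32C (Castagnoli) checksums, as specified by the iSCSI standard (RFC 3720, later consolidated into RFC 7143). To illustrate the per-byte work that the offload engine removes from the host CPU, the following Python sketch implements CRC32C bit by bit. It is deliberately simple and is much slower than the table-driven or hardware implementations that are used in practice.

```python
CRC32C_POLY_REFLECTED = 0x82F63B78  # Castagnoli polynomial in bit-reversed form


def crc32c(data: bytes, crc: int = 0) -> int:
    """Bitwise CRC-32C (reflected, initial value and final XOR 0xFFFFFFFF).

    Pass a previous result as crc to continue a checksum over more data.
    """
    crc ^= 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            # Shift out one bit; apply the polynomial when that bit was set.
            if crc & 1:
                crc = (crc >> 1) ^ CRC32C_POLY_REFLECTED
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF
```

`crc32c(b"123456789")` returns the standard CRC-32C check value `0xE3069283`, and passing an earlier result as `crc` lets a digest be computed over a PDU in pieces.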

Standards

The adapters support the following standards:

- PCI Express base spec 2.0, PCI Bus Power Management Interface, rev. 1.2, Advanced Error Reporting (AER)

- 802.3-2008 10GBASE Ethernet port

- 802.1Q VLAN

- 802.3x Flow Control with pause frames

- 802.1Qbg Edge Virtual Bridging

- 802.1Qaz Enhanced Transmission Selection (ETS) and Data Center Bridging Capability Exchange (DCBX)

- 802.1Qbb Priority Flow Control

- 802.3ad Link Aggregation/LACP

- 802.1AB Link Layer Discovery Protocol

- 802.3ae (SR Optics)

- 802.1AX (Link Aggregation)

- 802.1p (Priority of Service)

- 802.1Qau (Congestion Notification)

- IPv4 (RFC 791)

- IPv6 (RFC 2460)
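Several of the standards above, VLAN tagging and frame priority in particular, share one piece of frame format: the 4-byte 802.1Q tag. It consists of a 16-bit TPID of 0x8100 followed by a Tag Control Information field that packs a 3-bit Priority Code Point (the priority that Priority Flow Control acts on), a 1-bit Drop Eligible Indicator, and a 12-bit VLAN ID. This is the tag that Switch Independent Mode and UFP trunk mode rely on to keep vNIC traffic separated. The following Python sketch packs and unpacks such a tag; it illustrates the standard layout and is not code that runs on the adapter.

```python
import struct

TPID_8021Q = 0x8100  # Tag Protocol Identifier for a customer VLAN tag


def build_vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Return the 4-byte 802.1Q tag: TPID, then PCP(3) | DEI(1) | VID(12).

    VIDs 0 and 4095 are reserved by the standard; this sketch does not reject them.
    """
    if not 0 <= vid <= 0xFFF or not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("VID is 12 bits, PCP is 3 bits, DEI is 1 bit")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)  # network byte order


def parse_vlan_tag(tag: bytes) -> tuple[int, int, int]:
    """Return (vid, pcp, dei) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError(f"not an 802.1Q tag (TPID 0x{tpid:04x})")
    return tci & 0xFFF, tci >> 13, (tci >> 12) & 1
```

For example, `build_vlan_tag(100, pcp=3)` returns the bytes `81 00 60 64`, and `parse_vlan_tag` recovers `(100, 3, 0)` from them.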

Modes of operation

The CN4058S and CN4052 support the following vNIC modes of operation and pNIC mode:

- Virtual Fabric Mode (also known as vNIC1 mode). This mode works only when one of the following switches is installed in the chassis:

- Flex System Fabric CN4093 10Gb Converged Scalable Switch

- Flex System Fabric EN4093R 10Gb Scalable Switch

- Flex System Fabric EN4093 10Gb Scalable Switch

In this mode, the adapter uses DCBX to communicate with the switch module and obtain vNIC parameters. Also, for each vNIC group, a special tag is added to each data packet and later removed by the NIC and switch to maintain separation of the virtual channels. In vNIC mode, each physical port is divided into four virtual ports, which provides a total of 32 (CN4058S) or 8 (CN4052) virtual NICs per adapter. The default bandwidth for each vNIC is 2.5 Gbps. Bandwidth for each vNIC can be configured at the switch from 100 Mbps to 10 Gbps, up to a total of 10 Gbps per physical port. A vNIC can also be configured with 0 bandwidth if you must allocate the available bandwidth to fewer than the four vNICs on a port. In Virtual Fabric Mode, you can change the bandwidth allocations through the switch user interfaces without requiring a reboot of the server.

When storage protocols are enabled on the CN4058S (with the appropriate FoD upgrade as listed in Table 1), 28 vNICs are Ethernet, and eight are iSCSI or FCoE.

- Switch Independent Mode (also known as vNIC2 mode), where the adapter works with the following switches:

- Cisco Nexus B22 Fabric Extender for Flex System

- Flex System EN4023 10Gb Scalable Switch

- Flex System Fabric CN4093 10Gb Converged Scalable Switch

- Flex System Fabric EN4093R 10Gb Scalable Switch

- Flex System Fabric EN4093 10Gb Scalable Switch

- Flex System Fabric SI4091 System Interconnect Module

- Flex System Fabric SI4093 System Interconnect Module

- Flex System EN4091 10Gb Ethernet Pass-thru and a top-of-rack (TOR) switch

Switch Independent Mode offers the same capabilities as Virtual Fabric Mode in terms of the number of vNICs and the bandwidth that each can be configured to include. However, Switch Independent Mode extends the existing customer VLANs to the virtual NIC interfaces. The IEEE 802.1Q VLAN tag is essential to the separation of the vNIC groups by the NIC adapter or driver and the switch. The VLAN tags are added to the packet by the applications or drivers at each end station rather than by the switch.

- Unified Fabric Port (UFP) provides a feature-rich solution compared to the original vNIC Virtual Fabric mode. As with Virtual Fabric mode vNIC, UFP allows carving up a single 10 Gb port into four virtual NICs (called vPorts in UFP). UFP also has the following modes that are associated with it:

- Tunnel mode: Provides Q-in-Q mode, where the vPort is customer VLAN-independent (very similar to vNIC Virtual Fabric Dedicated Uplink Mode).

- Trunk mode: Provides a traditional 802.1Q trunk mode (multi-VLAN trunk link) to the virtual NIC (vPort) interface; that is, it permits host-side tagging.

- Access mode: Provides a traditional access mode (single untagged VLAN) to the virtual NIC (vPort) interface, which is similar to a physical port in access mode.

- FCoE mode: Provides FCoE functionality to the vPort.

- Auto-VLAN mode: Auto VLAN creation for Qbg and VMready environments.

Only one vPort (vPort 2) per physical port can be bound to FCoE. If FCoE is not wanted, vPort 2 can be configured for one of the other modes. UFP works with the following switches:

- Flex System Fabric CN4093 10Gb Converged Scalable Switch

- Flex System Fabric EN4093R 10Gb Scalable Switch

- Flex System Fabric SI4093 System Interconnect Module

- In pNIC mode, the adapter can operate as a standard 10 Gbps or 1 Gbps 8-port (CN4058S) or 2-port (CN4052) Ethernet expansion card. When in pNIC mode, the adapter functions with all supported I/O modules.

In pNIC mode, the adapter with the FoD upgrade applied operates in traditional Converged Network Adapter (CNA) mode with eight ports (CN4058S) or two ports (CN4052) of Ethernet and eight ports (CN4058S) or two ports (CN4052) of iSCSI or FCoE available to the operating system.

Embedded 10Gb Virtual Fabric Adapter

Some models of the X6 compute nodes include one Embedded 4-port 10Gb Virtual Fabric controller (VFA), which is also known as LAN on Motherboard or LOM. This controller is built into the system board. The controller is Emulex XE104-based like the CN4058S 8-port 10Gb Virtual Fabric Adapter and has the same features.

Using the Fabric Connector, two 10 Gb ports are routed to I/O module bay 1 and two 10 Gb ports are routed to I/O module bay 2. A compatible I/O module must be installed in each of those I/O module bays.

The Embedded 10Gb VFA also supports iSCSI and FCoE using the Lenovo Features on Demand (FoD) license upgrade listed in the following table. Only one upgrade is needed per compute node.

Withdrawn: This FoD license upgrade is now withdrawn from marketing.

Table 2. Features on Demand upgrade for FCoE and iSCSI support

| Part number | Feature code | Description |
|---|---|---|
| 00FM258* | A5TM | Flex System X6 Embedded 10Gb Virtual Fabric Upgrade |

* Withdrawn from marketing

Supported servers

The following table lists the Flex System compute nodes that support the adapters.

Table 3. Supported servers

| Description | Part number | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CN4058S 8-port 10Gb Virtual Fabric Adapter | 94Y5160 | N | N | N | N | Y | Y | N | N | N | Y* | Y |
| CN4058S Virtual Fabric Adapter Software Upgrade (FoD) | 94Y5164 | N | N | N | N | Y | Y | N | N | N | Y | Y |
| Flex System CN4052 2-port 10Gb Virtual Fabric Adapter | 00JY800 | N | N | N | N | Y | Y | N | N | N | Y | Y |
| Flex System CN4052 Virtual Fabric Adapter SW Upgrade (FoD) | 00JY804 | N | N | N | N | Y | Y | N | N | N | N | Y |

* The CN4058S 8-port 10Gb Virtual Fabric Adapter has two ASICs and is not supported in slots 3 and 4

For more information about the expansion cards that are supported by each compute node, visit

http://www.lenovo.com/us/en/serverproven/

I/O adapter cards are installed in the I/O adapter slots of supported servers, such as the x240 M5, as highlighted in the following figure.

Figure 2. Location of the I/O adapter slots in the Flex System x240 M5 Compute Node

Supported I/O modules

These adapters can be installed in any I/O adapter slot of a supported Flex System compute node. One or two compatible 1 Gb or 10 Gb I/O modules must be installed in the corresponding I/O bays in the chassis. The following table lists the switches that are supported. When the adapter is connected to a 1 Gb switch, the adapter operates at 1 Gb speeds.

To maximize the number of adapter ports that are usable, you might also need to order switch upgrades to enable more ports as listed in the table. Alternatively, for CN4093, EN4093R, and SI4093 switches, you can use Flexible Port Mapping, which is a new feature of Networking OS 7.8 with which you can minimize the number of upgrades that are needed. For more information, see the Product Guides for the switches, which are available at this website:

http://lenovopress.com/flexsystem/iomodules

Note: Adapter ports 7 and 8 are reserved for future use. The chassis supports all eight ports, but there are no switches that are available that connect to these ports.

Table 4. I/O modules and upgrades for use with the CN4058S and CN4052 adapters

| Description | Part number | Port count (per pair of switches)* |
|---|---|---|
| Lenovo Flex System Fabric EN4093R 10Gb Scalable Switch + EN4093 10Gb Scalable Switch (Upgrade 1) | 00FM514 + 49Y4798 | 4 |
| Lenovo Flex System Fabric CN4093 10Gb Converged Scalable Switch + CN4093 10Gb Converged Scalable Switch (Upgrade 1) | 00FM510 + 49Y4798 | 4 |
| Lenovo Flex System SI4091 10Gb System Interconnect Module | 00FE327 | 2 |
| Lenovo Flex System Fabric SI4093 System Interconnect Module + SI4093 System Interconnect Module (Upgrade 1) | 00FM518 + 95Y3318 | 4 |
| CN4093 10Gb Converged Scalable Switch + CN4093 10Gb Converged Scalable Switch (Upgrade 1) + CN4093 10Gb Converged Scalable Switch (Upgrade 2) | 00D5823 + 00D5845 + 00D5847 | 6 |
| EN4093R 10Gb Scalable Switch + EN4093 10Gb Scalable Switch (Upgrade 1) + EN4093 10Gb Scalable Switch (Upgrade 2) | 95Y3309 + 49Y4798 + 88Y6037 | 6 |
| EN4093 10Gb Scalable Switch + EN4093 10Gb Scalable Switch (Upgrade 1) + EN4093 10Gb Scalable Switch (Upgrade 2) | 49Y4270** + 49Y4798 + 88Y6037 | 6 |
| Flex System EN4091 10Gb Ethernet Pass-thru | 88Y6043 | 2 |
| SI4093 System Interconnect Module + SI4093 System Interconnect Module (Upgrade 1) + SI4093 System Interconnect Module (Upgrade 2) | 95Y3313 + 95Y3318 + 95Y3320 | 6 |
| EN2092 1Gb Ethernet Scalable Switch + EN2092 1Gb Ethernet Scalable Switch (Upgrade 1) | 49Y4294 + 90Y3562 | 4 |
| Cisco Nexus B22 Fabric Extender for Flex System | 94Y5350 | 2 |
| EN4023 10Gb Scalable Switch (base switch has 24 port licenses; Upgrades 1 and 2 may be needed) | 94Y5212 | 6 |

* This column indicates the number of adapter ports that are active if indicated upgrades are installed.

** Withdrawn from marketing.
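Table 4 can be read as a lookup: the number of adapter ports that come up depends on which I/O module pair and upgrades are installed, and it can never exceed the number of ports on the adapter itself. The following Python sketch encodes that lookup. The configuration keys are abbreviated labels for the table rows chosen for illustration, and the sketch ignores Flexible Port Mapping (which can reduce the upgrades that are needed) as well as server and slot compatibility.

```python
# Adapter ports that are active per pair of I/O modules, copied from Table 4.
# Keys are abbreviated, illustrative labels for the table rows.
PORTS_PER_SWITCH_PAIR = {
    "EN4093R (00FM514) + Upgrade 1": 4,
    "CN4093 (00FM510) + Upgrade 1": 4,
    "SI4091": 2,
    "SI4093 (00FM518) + Upgrade 1": 4,
    "CN4093 + Upgrades 1 and 2": 6,
    "EN4093R + Upgrades 1 and 2": 6,
    "EN4093 + Upgrades 1 and 2": 6,
    "EN4091 Pass-thru": 2,
    "SI4093 + Upgrades 1 and 2": 6,
    "EN2092 + Upgrade 1": 4,
    "Cisco Nexus B22": 2,
    "EN4023 (with upgrades as needed)": 6,
}

ADAPTER_PORTS = {"CN4058S": 8, "CN4052": 2}


def active_ports(adapter: str, switch_pair: str) -> int:
    """Number of adapter ports that can be active with the given I/O module pair."""
    if adapter not in ADAPTER_PORTS:
        raise KeyError(f"unknown adapter {adapter!r}")
    if switch_pair not in PORTS_PER_SWITCH_PAIR:
        raise KeyError(f"configuration {switch_pair!r} is not in Table 4")
    # The adapter cannot expose more ports than it physically has.
    return min(ADAPTER_PORTS[adapter], PORTS_PER_SWITCH_PAIR[switch_pair])
```

For example, `active_ports("CN4058S", "CN4093 + Upgrades 1 and 2")` returns 6 because ports 7 and 8 have no switch connection, while the same switches give the CN4052 both of its ports. With the EN2092 rows, those ports run at 1 Gb.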

The following table shows the connections between adapters that are installed in the compute nodes and the switch bays in the chassis.

Note: The CN4058S is not supported in slots 3 and 4 of the x880 X6, x480 X6 and x280 X6.

Table 5. Adapter to I/O bay correspondence

| I/O adapter slot in the server | Port on the adapter* | Chassis I/O module bay 1 | Chassis I/O module bay 2 | Chassis I/O module bay 3 | Chassis I/O module bay 4 |
|---|---|---|---|---|---|
| Slot 1 | Port 1 | Yes | No | No | No |
| Slot 1 | Port 2 | No | Yes | No | No |
| Slot 1 | Port 3 | Yes | No | No | No |
| Slot 1 | Port 4 | No | Yes | No | No |
| Slot 1 | Port 5 | Yes | No | No | No |
| Slot 1 | Port 6 | No | Yes | No | No |
| Slot 1 | Port 7** | Yes | No | No | No |
| Slot 1 | Port 8** | No | Yes | No | No |
| Slot 2 | Port 1 | No | No | Yes | No |
| Slot 2 | Port 2 | No | No | No | Yes |
| Slot 2 | Port 3 | No | No | Yes | No |
| Slot 2 | Port 4 | No | No | No | Yes |
| Slot 2 | Port 5 | No | No | Yes | No |
| Slot 2 | Port 6 | No | No | No | Yes |
| Slot 2 | Port 7** | No | No | Yes** | No |
| Slot 2 | Port 8** | No | No | No | Yes** |

* The use of adapter ports 3, 4, 5, and 6 requires upgrades to the switches as described in Table 4. The EN4091 Pass-thru supports only ports 1 and 2 (and only when two Pass-thru modules are installed).

** Adapter ports 7 and 8 are reserved for future use. The chassis supports all eight ports, but there are no switches that are available that connect to these ports.
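The pattern in Table 5 is regular: an adapter in slot 1 connects to bays 1 and 2, an adapter in slot 2 connects to bays 3 and 4, odd-numbered adapter ports go to the first bay of the pair, and even-numbered ports go to the second. The following Python sketch captures that mapping together with the table footnotes (ports 3 to 6 need switch upgrades; ports 7 and 8 are reserved). It covers only the two slots shown in the table, and the function names are illustrative.

```python
def io_bay(slot: int, port: int) -> int:
    """Return the chassis I/O module bay that an adapter port connects to (Table 5)."""
    if slot not in (1, 2):
        raise ValueError("Table 5 covers I/O adapter slots 1 and 2 only")
    if not 1 <= port <= 8:
        raise ValueError("adapter ports are numbered 1 to 8")
    first_bay = 1 if slot == 1 else 3
    # Odd ports go to the first bay of the pair, even ports to the second.
    return first_bay if port % 2 == 1 else first_bay + 1


def port_note(port: int) -> str:
    """Summarize the Table 5 footnotes for an adapter port."""
    if port in (1, 2):
        return "usable without switch upgrades"
    if 3 <= port <= 6:
        return "requires switch upgrades (see Table 4)"
    if port in (7, 8):
        return "reserved for future use"
    raise ValueError("adapter ports are numbered 1 to 8")
```

For example, `io_bay(2, 5)` returns 3, so port 5 of an adapter in slot 2 depends on the I/O module in bay 3.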

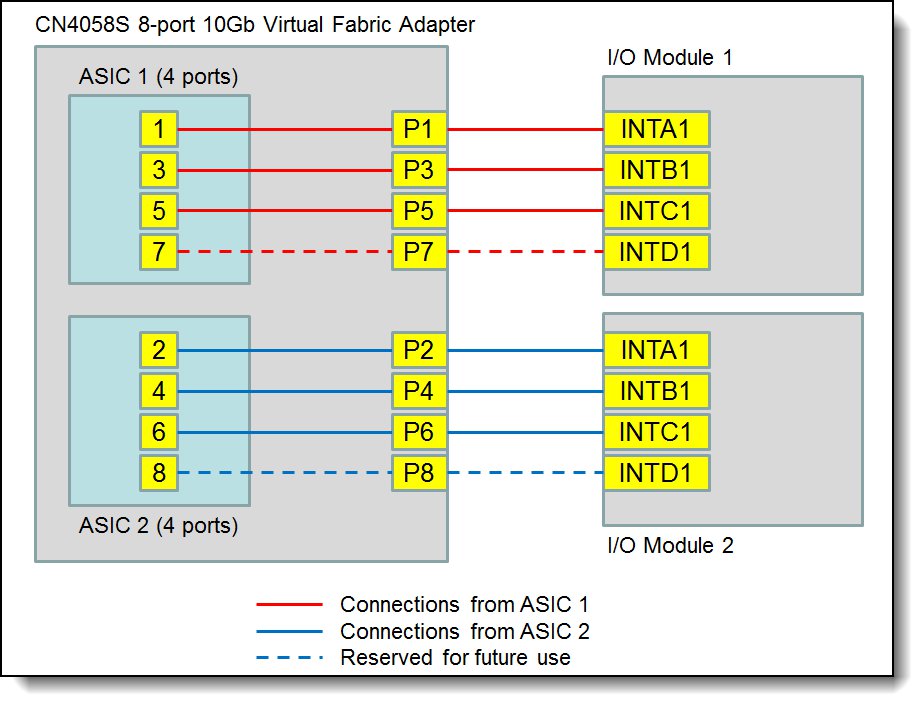

The following figure shows the internal layout of the CN4058S showing how the adapter ports are routed to the I/O module ports. Red lines indicate connections from ASIC 1 on the adapter and blue lines are the connections from ASIC 2. The dotted blue lines are reserved for future use when switches are available that support all 8 ports of the adapter.

Figure 3. Internal layout of the CN4058S adapter ports

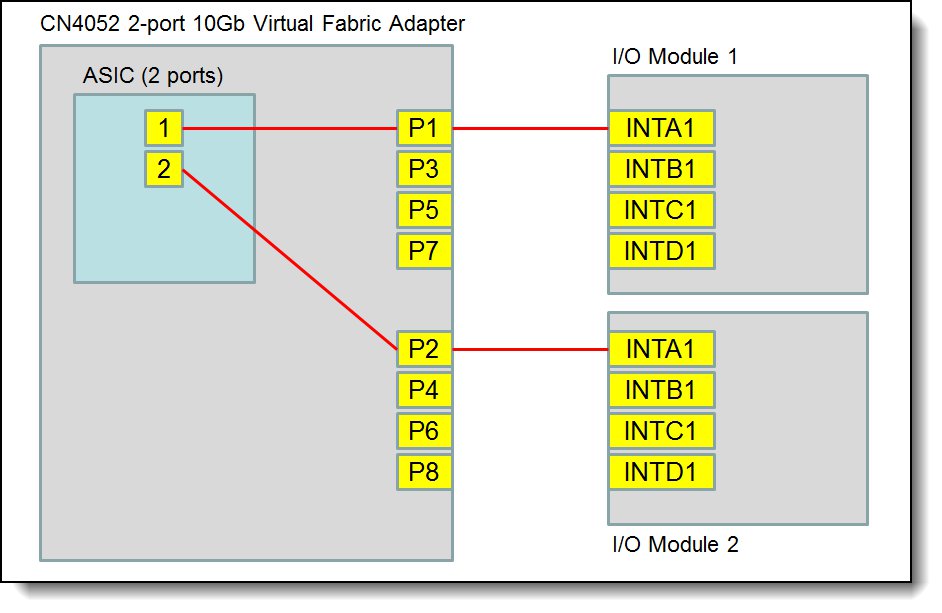

The following figure shows the internal layout of the CN4052 showing how the adapter ports are routed to the I/O module ports.

Figure 4. Internal layout of the CN4052 adapter ports

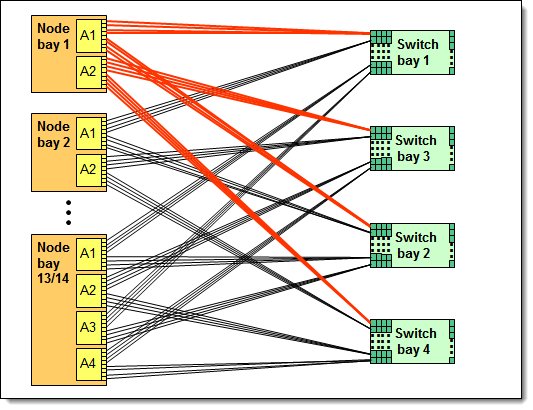

The connections between the CN4058S 8-port adapters that are installed in the compute nodes and the switch bays in the chassis are shown in the following figure. See Table 3 for specific server compatibility. See Table 4 for specific switch compatibility.

Figure 5. Logical layout of the interconnects between I/O adapters and I/O modules

Operating system support

The CN4058S and CN4052 support the following operating systems:

- Microsoft Windows Server 2012

- Microsoft Windows Server 2012 R2

- Microsoft Windows Server 2016

- Microsoft Windows Server 2019

- Microsoft Windows Server version 1709

- Red Hat Enterprise Linux 6 Server x64 Edition

- SUSE Linux Enterprise Server 11 for AMD64/EM64T

- SUSE Linux Enterprise Server 11 with Xen for AMD64/EM64T

- SUSE Linux Enterprise Server 12

- SUSE Linux Enterprise Server 12 with Xen

- SUSE Linux Enterprise Server 15

- SUSE Linux Enterprise Server 15 with Xen

- VMware vSphere 5.1 (ESXi)

- VMware vSphere Hypervisor (ESXi) 5.5

- VMware vSphere Hypervisor (ESXi) 6.5

- VMware vSphere Hypervisor (ESXi) 6.7

Operating system support may vary by server. For more information, see ServerProven at http://www.lenovo.com/us/en/serverproven/. Select the server in question, scroll down to the adapter, and click the + icon in that row to show the supported operating systems.

Warranty

There is a 1-year, customer-replaceable unit (CRU) limited warranty. When installed in a server, these adapters assume your system’s base warranty and any Lenovo warranty service upgrade purchased for the system.

Physical specifications

The adapter features the following dimensions and weight:

- Width: 100 mm (3.9 in.)

- Depth: 80 mm (3.1 in.)

- Weight: 13 g (0.3 lb)

The adapter features the following shipping dimensions and weight (approximate):

- Height: 58 mm (2.3 in.)

- Width: 229 mm (9.0 in.)

- Depth: 208 mm (8.2 in.)

- Weight: 0.4 kg (0.89 lb)

Regulatory compliance

The adapter conforms to the following regulatory standards:

- United States FCC 47 CFR Part 15, Subpart B, ANSI C63.4 (2003), Class A

- United States UL 60950-1, Second Edition

- IEC/EN 60950-1, Second Edition

- FCC - Verified to comply with Part 15 of the FCC Rules, Class A

- Canada ICES-003, issue 4, Class A

- UL/IEC 60950-1

- CSA C22.2 No. 60950-1-03

- Japan VCCI, Class A

- Australia/New Zealand AS/NZS CISPR 22:2006, Class A

- IEC 60950-1 (CB Certificate and CB Test Report)

- Taiwan BSMI CNS13438, Class A

- Korea KN22, Class A; KN24

- Russia/GOST ME01, IEC-60950-1, GOST R 51318.22-99, GOST R 51318.24-99, GOST R 51317.3.2-2006, GOST R 51317.3.3-99


- CE Mark (EN55022 Class A, EN60950-1, EN55024, EN61000-3-2, EN61000-3-3)

- CISPR 22, Class A

Popular configurations

The adapters can be used in various configurations.

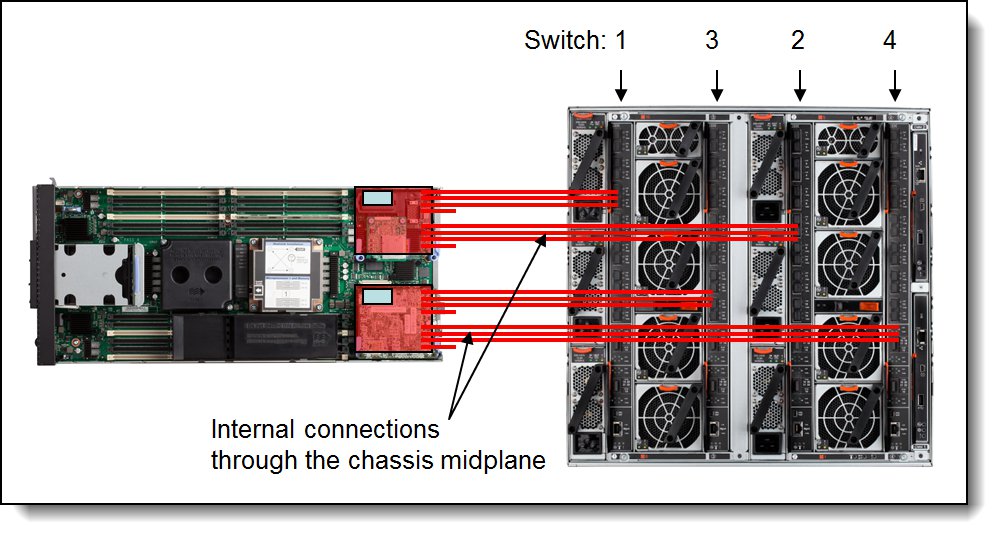

Ethernet configuration

The following figure shows the CN4058S 8-port 10Gb Virtual Fabric Adapter installed in both slots of the x240 M5 Compute Node (a model without the Embedded 10Gb Virtual Fabric Adapter), which in turn is installed in the chassis. The chassis also has four Flex System Fabric CN4093 10Gb Converged Scalable Switches. Depending on the number of compute nodes that are installed and the number of external ports you want to enable, you might also need switch upgrades 1 and 2. Even with both of these upgrades, the CN4093 supports only six of the eight ports on each adapter.

Figure 6. Example configuration

The following table lists the parts that are used in the configuration.

Table 6. Components that are used when the adapter is connected to the 10 GbE switches

| Part number/machine type | Description | Quantity |
|---|---|---|
| Varies | Supported compute node | 1 to 14 |
| 94Y5160 | CN4058S 8-port 10Gb Virtual Fabric Adapter | 1 or 2 per server |
| 8721-A1x | Flex System Enterprise Chassis | 1 |
| 00D5823 | Flex System Fabric CN4093 10Gb Converged Scalable Switch | 4 |
| 00D5845 | Flex System Fabric CN4093 Converged Scalable Switch (Upgrade 1) | Optional with flexible port mapping |
| 00D5847 | Flex System Fabric CN4093 Converged Scalable Switch (Upgrade 2) | Optional with flexible port mapping |

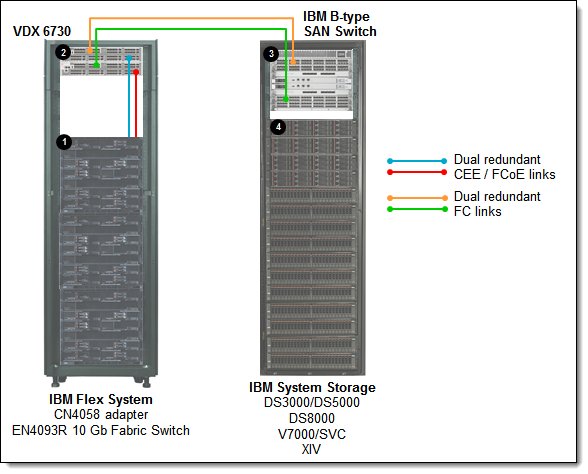

FCoE configuration using a Brocade SAN

The CN4058S adapter can be used with EN4093R 10Gb Scalable Switches acting as Data Center Bridging (DCB) switches that transport FCoE frames by using FCoE Initialization Protocol (FIP) snooping. The encapsulated FCoE packets are sent to the Brocade VDX 6730 Fibre Channel Forwarder (FCF), which functions as an aggregation switch and an FCoE gateway, as shown in the following figure.


Figure 7. FCoE solution that uses the EN4093R as an FCoE transit switch with the Brocade VDX 6730 as an FCF

The solution components that are used in the scenario as shown in the figure are listed in the following table.

Table 7. FCoE solution that uses the EN4093R as an FCoE transit switch with the Brocade VDX 6730 as an FCF

| Diagram reference | Description | Part number | Quantity |
|---|---|---|---|
| | Flex System FCoE solution | | |
| | Flex System CN4058S 8-port 10Gb Virtual Fabric Adapter | 94Y5160 | 1 per server |
| | Flex System Fabric EN4093R 10Gb Scalable Switch | 95Y3309 | 2 per chassis |
| | Flex System Fabric EN4093 10Gb Scalable Switch (Upgrade 1) | 49Y4798 | Optional with FPM |
| | Flex System Fabric EN4093 10Gb Scalable Switch (Upgrade 2) | 88Y6037 | Optional with FPM |
| | Brocade VDX 6730 Converged Switch | | |
| | B-type or Brocade SAN fabric | | |
| | IBM FC disk controllers | | |
| | IBM DS3000 / DS5000 | | |
| | IBM DS8000 | | |
| | IBM Storwize V7000 / SAN Volume Controller | | |
| | IBM XIV | | |

For a full listing of supported FCoE and iSCSI configurations, see the System Storage Interoperation Center (SSIC), which is available at this website:

http://ibm.com/systems/support/storage/ssic


Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

Flex System

ServerProven®

System x®

VMready®

The following terms are trademarks of other companies:

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Microsoft®, SQL Server®, SharePoint®, Windows Server®, and Windows® are trademarks of Microsoft Corporation in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.