Abstract

The Mellanox ConnectX-3 Mezz FDR 2-Port InfiniBand Adapter delivers low latency and high bandwidth for performance-driven server clustering applications in enterprise data centers, high-performance computing (HPC), and embedded environments. The adapters are designed to operate at InfiniBand FDR speeds (56 Gbps or 14 Gbps per lane).

This product guide provides essential presales information to understand the Mellanox adapters and their key features, specifications and compatibility. This guide is intended for technical specialists, sales specialists, sales engineers, IT architects, and other IT professionals who want to learn more about the adapters and consider their use in IT solutions.

Withdrawn from marketing: ThinkSystem Mellanox ConnectX-3 Mezz FDR 2-Port InfiniBand Adapter is now withdrawn from marketing.

Introduction

The Mellanox ConnectX-3 Mezz FDR 2-Port InfiniBand Adapter delivers low latency and high bandwidth for performance-driven server clustering applications in enterprise data centers, high-performance computing (HPC), and embedded environments. The adapter is designed to operate at InfiniBand FDR speeds (56 Gbps or 14 Gbps per lane).

Clustered databases, parallelized applications, transactional services, and high-performance embedded I/O applications can achieve significant performance improvements, helping to reduce completion time and lower the cost per operation. These Mellanox ConnectX-3 adapters simplify network deployment by consolidating clustering, communications, and management I/O, and help provide enhanced performance in virtualized server environments.

Note: The Flex System IB6132 2-port FDR InfiniBand Adapter is withdrawn from marketing.

Figure 1 shows the adapter.

Figure 1. Mellanox ConnectX-3 Mezz FDR 2-Port InfiniBand Adapter

Did you know?

Mellanox InfiniBand adapters deliver industry-leading bandwidth with ultra-low, sub-microsecond latency for performance-driven server clustering applications. Combined with the IB6131 InfiniBand Switch, the adapter lets your organization compute more efficiently by off-loading protocol processing and data movement, such as Remote Direct Memory Access (RDMA) and Send/Receive semantics, from the CPU, leaving more processor power for the application. Advanced acceleration technology enables more than 90M Message Passing Interface (MPI) messages per second, so clusters can scale efficiently to tens of thousands of nodes.

Part number information

The following table shows the part numbers to order the adapters.

Withdrawn: The adapters described in this product guide are now withdrawn from marketing.

| Part number | Feature code | Description |
|---|---|---|
| 90Y3454 | A1QZ | Flex System IB6132 2-port FDR InfiniBand Adapter |
| 7ZT7A00508 | AUKV | ThinkSystem Mellanox ConnectX-3 Mezz FDR 2-Port InfiniBand Adapter |

The part numbers include the following items:

- One adapter for use in ThinkSystem and Flex System compute nodes

- Documentation

Features

The adapter has the following features:

Performance

Mellanox ConnectX-3 technology provides a high level of throughput performance for all network environments by removing the I/O bottlenecks in mainstream servers that limit application performance. Servers can achieve up to 56 Gbps transmit and receive bandwidth. Hardware-based InfiniBand transport and IP over InfiniBand (IPoIB) stateless off-load engines handle the segmentation, reassembly, and checksum calculations that otherwise burden the host processor.

RDMA over the InfiniBand fabric further accelerates application run time while reducing CPU utilization. RDMA benefits very high-volume, transaction-intensive applications typical of HPC and financial market firms, as well as other industries where speed of data delivery is paramount. With the ConnectX-3-based adapter, highly compute-intensive tasks running on hundreds or thousands of multiprocessor nodes, such as climate research, molecular modeling, and physical simulations, can share data and synchronize faster, resulting in shorter run times. High-frequency transaction applications can access trading information more quickly, so that trading servers can respond first to new market data and market inefficiencies, while the higher throughput enables higher-volume trading, maximizing liquidity and profitability.

In data mining or web crawl applications, RDMA provides the needed boost in performance to search faster by solving the network latency bottleneck associated with I/O cards and the corresponding transport technology in the cloud. Other applications that benefit from RDMA with ConnectX-3 include Web 2.0 (content delivery networks), business intelligence, database transactions, and various cloud computing applications. The low power consumption of Mellanox ConnectX-3 provides clients with high bandwidth and low latency at the lowest cost of ownership.

Quality of service

Resource allocation per application or per VM is provided by the advanced quality of service (QoS) supported by ConnectX-3. Service levels for multiple traffic types can be assigned on a per flow basis, allowing system administrators to prioritize traffic by application, virtual machine, or protocol. This powerful combination of QoS and prioritization provides the ultimate fine-grained control of traffic, ensuring that applications run smoothly in today’s complex environments.

Specifications

The adapters have the following specifications:

- Based on Mellanox ConnectX-3 technology

- InfiniBand Architecture Specification v1.2.1 compliant

- Supported InfiniBand speeds (auto-negotiated):

  - 1X/2X/4X Single Data Rate (SDR) (2.5 Gb/s per lane)

  - Double Data Rate (DDR) (5 Gb/s per lane)

  - Quad Data Rate (QDR) (10 Gb/s per lane)

  - FDR10 (40 Gb/s, 10 Gb/s per lane)

  - Fourteen Data Rate (FDR) (56 Gb/s, 14 Gb/s per lane)

- PCI Express 3.0 x8 host-interface up to 8 GT/s bandwidth

- CPU off-load of transport operations

- CORE-Direct application off-load

- GPUDirect application off-load

- End-to-end QoS and congestion control

- Hardware-based I/O virtualization

- Transmission Control Protocol (TCP)/User Datagram Protocol (UDP)/Internet Protocol (IP) stateless off-load

- Ethernet encapsulation (EoIB)

- RoHS-6 compliant

- Power consumption: 9 W typical, 11 W maximum

Note: To operate at InfiniBand FDR speeds, the Flex System IB6131 InfiniBand Switch requires the FDR Upgrade license, 90Y3462.
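The link speeds quoted in the specifications follow from the per-lane rate multiplied by the number of lanes in the link. The following minimal Python sketch reproduces that arithmetic for a 4X (four-lane) port. The names `LANE_RATE_GBPS` and `link_rate_gbps` are illustrative only, and the result is the raw signaling rate, which ignores line-encoding overhead.

```python
# Per-lane signaling rates (Gb/s), taken from the speed list in the specifications.
LANE_RATE_GBPS = {
    "SDR": 2.5,
    "DDR": 5.0,
    "QDR": 10.0,
    "FDR10": 10.0,
    "FDR": 14.0,
}


def link_rate_gbps(speed: str, lanes: int = 4) -> float:
    """Return the raw signaling rate of a link: per-lane rate times lane count."""
    return LANE_RATE_GBPS[speed] * lanes


if __name__ == "__main__":
    # Print the 4X link rate for every supported speed.
    for speed in LANE_RATE_GBPS:
        print(f"{speed:>5} 4X: {link_rate_gbps(speed):g} Gb/s")
```

For example, `link_rate_gbps("FDR")` gives 56.0, matching the 56 Gb/s figure quoted for FDR, and `link_rate_gbps("FDR10")` gives 40.0.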

Supported servers

The following table lists the ThinkSystem and Flex System compute nodes that support the adapters.

| Part number | Description | x240 (8737, E5-2600 v2) | x240 (7162) | x240 M5 (9532, E5-2600 v3) | x240 M5 (9532, E5-2600 v4) | x440 (7167) | x880/x480/x280 X6 (7903) | x280/x480/x880 X6 (7196) | SN550 (7X16) | SN850 (7X15) |
|---|---|---|---|---|---|---|---|---|---|---|
| 90Y3454 | Flex System IB6132 2-port FDR InfiniBand Adapter | N | Y | Y | Y | N | N | Y | N | N |
| 7ZT7A00508 | ThinkSystem Mellanox ConnectX-3 Mezz FDR 2-Port InfiniBand Adapter | N | N | N | N | N | N | N | Y | Y |

Supported I/O modules

The adapters support the I/O module listed in the following table. One or two compatible switches must be installed in the corresponding I/O bays in the chassis. Installing two switches means that both ports of the adapter are enabled. The adapter has a total bandwidth of 56 Gbps. That total bandwidth is shared when two switches are installed. To operate at FDR speeds (56 Gbps), you must also install the FDR Upgrade license, 90Y3462.

Table 3. I/O modules supported with the FDR InfiniBand adapters

| Description | Part number |
|---|---|
| Flex System IB6131 InfiniBand Switch | 90Y3450 |
| Flex System IB6131 InfiniBand Switch (FDR Upgrade)* | 90Y3462 |

* This license allows the switch to support FDR speeds.

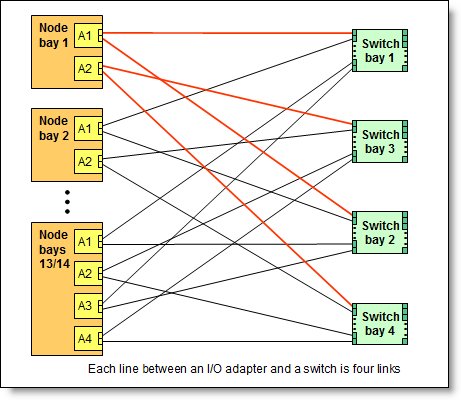

The following table shows the connections between adapters installed in the compute nodes and the switch bays in the chassis.

Table 4. Adapter to I/O bay correspondence

| I/O adapter slot in the server | Port on the adapter | Corresponding I/O module bay in the chassis |
|---|---|---|
| Slot 1 | Port 1 | Module bay 1 |
| Slot 1 | Port 2 | Module bay 2 |
| Slot 2 | Port 1 | Module bay 3 |
| Slot 2 | Port 2 | Module bay 4 |

The connections between the adapters installed in the compute nodes and the switch bays in the chassis are shown diagrammatically in the following figure.

Figure 2. Logical layout of the interconnects between I/O adapters and I/O modules
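The slot-and-port to module-bay correspondence in Table 4 follows a simple pattern: each adapter slot maps to a consecutive pair of bays. As a minimal sketch, the mapping can be written as a small Python function. The name `module_bay` is hypothetical and only covers the two slots and two ports listed in the table.

```python
def module_bay(slot: int, port: int) -> int:
    """Map an adapter slot (1-2) and adapter port (1-2) to the chassis I/O module bay (1-4).

    The formula reproduces the four rows of Table 4 and nothing beyond them.
    """
    if slot not in (1, 2) or port not in (1, 2):
        raise ValueError("slot and port must each be 1 or 2")
    return 2 * (slot - 1) + port


if __name__ == "__main__":
    # Print the full correspondence for both slots and both ports.
    for slot in (1, 2):
        for port in (1, 2):
            print(f"Slot {slot}, port {port} -> module bay {module_bay(slot, port)}")
```

For an adapter in slot 2, port 1 connects to module bay 3 and port 2 to module bay 4, so a switch is needed in each of those bays to use both ports.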

Supported operating systems

The adapters support the operating systems listed in the following tables.

| Operating systems | SN550 (Xeon Gen 2) | SN850 (Xeon Gen 2) | SN550 (Xeon Gen 1) | SN850 (Xeon Gen 1) |
|---|---|---|---|---|
| Microsoft Windows Server 2012 R2 | N | N | Y | Y |
| Microsoft Windows Server 2016 | Y | Y | Y | Y |
| Microsoft Windows Server 2019 | Y | Y | Y | Y |
| Microsoft Windows Server version 1709 | N | N | Y | Y |
| Microsoft Windows Server version 1803 | N | N | Y | N |
| Red Hat Enterprise Linux 6.10 | N | N | Y | Y |
| Red Hat Enterprise Linux 6.9 | N | N | Y | Y |
| Red Hat Enterprise Linux 7.3 | N | N | Y | Y |
| Red Hat Enterprise Linux 7.4 | N | N | Y | Y |
| Red Hat Enterprise Linux 7.5 | N | N | Y | Y |
| Red Hat Enterprise Linux 7.6 | Y | Y | Y | Y |
| Red Hat Enterprise Linux 7.7 | Y | Y | Y | Y |
| Red Hat Enterprise Linux 7.8 | Y | Y | Y | Y |
| Red Hat Enterprise Linux 7.9 | Y | Y | Y | Y |
| Red Hat Enterprise Linux 8.0 | Y | Y | Y | Y |
| Red Hat Enterprise Linux 8.1 | Y | Y | Y | Y |
| Red Hat Enterprise Linux 8.2 | Y | Y | Y | Y |
| Red Hat Enterprise Linux 8.3 | Y | Y | Y | Y |
| Red Hat Enterprise Linux 8.4 | Y | Y | Y | Y |
| Red Hat Enterprise Linux 8.5 | Y | Y | Y | Y |
| SUSE Linux Enterprise Server 11 SP4 | N | N | Y | Y |
| SUSE Linux Enterprise Server 12 SP2 | N | N | Y | Y |
| SUSE Linux Enterprise Server 12 SP3 | N | N | Y | Y |
| SUSE Linux Enterprise Server 12 SP4 | Y | Y | Y | Y |
| SUSE Linux Enterprise Server 12 SP5 | Y | Y | Y | Y |
| SUSE Linux Enterprise Server 15 | Y | Y | Y | Y |
| SUSE Linux Enterprise Server 15 SP1 | Y | Y | Y | Y |
| SUSE Linux Enterprise Server 15 SP2 | Y | Y | Y | Y |
| SUSE Linux Enterprise Server 15 SP3 | Y | Y | Y | Y |
| VMware vSphere Hypervisor (ESXi) 6.0 U3 | N | N | Y | Y |
| VMware vSphere Hypervisor (ESXi) 6.5 | N | N | N | Y |
| VMware vSphere Hypervisor (ESXi) 6.5 U1 | N | N | N | Y |
| VMware vSphere Hypervisor (ESXi) 6.5 U2 | Y | Y | Y | Y |
| VMware vSphere Hypervisor (ESXi) 6.5 U3 | Y | Y | Y | Y |
| VMware vSphere Hypervisor (ESXi) 6.7 | N | N | Y | Y |
| VMware vSphere Hypervisor (ESXi) 6.7 U1 | Y | Y | Y | Y |
| VMware vSphere Hypervisor (ESXi) 6.7 U2 | Y | Y | Y | Y |
| VMware vSphere Hypervisor (ESXi) 6.7 U3 | Y | Y | Y | Y |
| VMware vSphere Hypervisor (ESXi) 7.0 | Y | Y | Y | Y |
| VMware vSphere Hypervisor (ESXi) 7.0 U1 | Y | Y | Y | Y |
| VMware vSphere Hypervisor (ESXi) 7.0 U2 | Y | Y | Y | Y |
| VMware vSphere Hypervisor (ESXi) 7.0 U3 | Y | Y | Y | Y |

| Operating systems | x220 (7906) | x240 (8737, E5 v2) | x240 (7162) | x240 (8737, E5 v1) | x240 M5 (9532) | x280/x480/x880 X6 (7196) | x280/x480/x880 X6 (7903) | x440 (7917) |
|---|---|---|---|---|---|---|---|---|
| Microsoft Windows Server 2008 R2 | Y ¹ | Y | N | Y ¹ | N | N | Y | Y |
| Microsoft Windows Server 2008, Datacenter x64 Edition | Y ¹ | N | N | Y ¹ | N | N | N | Y |
| Microsoft Windows Server 2008, Enterprise x64 Edition | Y ¹ | N | N | Y ¹ | N | N | N | Y |
| Microsoft Windows Server 2008, Standard x64 Edition | Y ¹ | N | N | Y ¹ | N | N | N | Y |
| Microsoft Windows Server 2008, Web x64 Edition | Y ¹ | N | N | Y ¹ | N | N | N | Y |
| Microsoft Windows Server 2012 | Y | N | N | Y | N | N | N | Y |
| Microsoft Windows Server 2012 R2 | Y | Y | Y | Y | Y | Y | Y | Y |
| Microsoft Windows Server 2016 | N | Y | N | Y | Y | N | Y | N |
| Microsoft Windows Server 2019 | N | N | N | N | Y | N | N | N |
| Microsoft Windows Server version 1709 | N | N | N | N | Y | Y | N | N |
| Red Hat Enterprise Linux 5 Server with Xen x64 Edition | N | N | N | Y | N | N | N | N |
| Red Hat Enterprise Linux 5 Server x64 Edition | Y ¹ | Y | N | Y ¹ | N | N | N | Y |
| SUSE Linux Enterprise Server 10 for AMD64/EM64T | Y ¹ | N | N | Y ¹ | N | N | N | Y |

¹ 81Y8983-7906 limits CX3 adapters to PCIe generation 2 performance.
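The footnote about PCIe generation 2 performance matters because the host interface can become the bottleneck for an FDR link. The following Python sketch estimates the usable bandwidth of an x8 host interface (the link width given in the specifications) for PCIe generations 2 and 3. The transfer rates and encoding schemes are assumptions taken from the PCI Express specifications, not from this guide, and the estimate is per direction and ignores packet and protocol overhead. The names `PCIE_GEN` and `pcie_bandwidth_gbps` are illustrative.

```python
# Assumed PCIe parameters (from the PCI Express specifications, not from this guide):
# generation -> (transfer rate in GT/s per lane, line-encoding efficiency)
PCIE_GEN = {
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
}

# FDR 4X link rate quoted in this guide (Gb/s).
FDR_LINK_GBPS = 56.0


def pcie_bandwidth_gbps(gen: int, lanes: int = 8) -> float:
    """Estimate usable PCIe bandwidth per direction in Gb/s, before protocol overhead."""
    rate_gtps, efficiency = PCIE_GEN[gen]
    return rate_gtps * efficiency * lanes


if __name__ == "__main__":
    for gen in sorted(PCIE_GEN):
        bandwidth = pcie_bandwidth_gbps(gen)
        verdict = "can" if bandwidth >= FDR_LINK_GBPS else "cannot"
        print(f"PCIe gen {gen} x8: {bandwidth:.1f} Gb/s, {verdict} carry one FDR port at line rate")
```

Under these assumptions, a generation 2 x8 interface tops out at roughly 32 Gb/s, well below the 56 Gb/s FDR link rate, while a generation 3 x8 interface provides about 63 Gb/s.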

Regulatory compliance

The adapters conform to the following standards:

- United States FCC 47 CFR Part 15, Subpart B, ANSI C63.4 (2003), Class A

- United States UL 60950-1, Second Edition

- IEC/EN 60950-1, Second Edition

- FCC - Verified to comply with Part 15 of the FCC Rules, Class A

- Canada ICES-003, issue 4, Class A

- UL/IEC 60950-1

- CSA C22.2 No. 60950-1-03

- Japan VCCI, Class A

- Australia/New Zealand AS/NZS CISPR 22:2006, Class A

- IEC 60950-1 (CB Certificate and CB Test Report)

- Taiwan BSMI CNS13438, Class A

- Korea KN22, Class A; KN24

- Russia/GOST ME01, IEC-60950-1, GOST R 51318.22-99, GOST R 51318.24-99, GOST R 51317.3.2-2006, GOST R 51317.3.3-99

- CE Mark (EN55022 Class A, EN60950-1, EN55024, EN61000-3-2, and EN61000-3-3)

- CISPR 22, Class A

Physical specifications

The dimensions and weight of the adapter are as follows:

- Width: 100 mm (3.9 inches)

- Depth: 80 mm (3.1 inches)

- Weight: 13 g (0.3 lb)

Shipping dimensions and weight (approximate):

- Height: 58 mm (2.3 in)

- Width: 229 mm (9.0 in)

- Depth: 208 mm (8.2 in)

- Weight: 0.4 kg (0.89 lb)

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

Flex System

ServerProven®

ThinkSystem®

The following terms are trademarks of other companies:

Xeon® is a trademark of Intel Corporation or its subsidiaries.

Linux® is the trademark of Linus Torvalds in the U.S. and other countries.

Microsoft®, Windows Server®, and Windows® are trademarks of Microsoft Corporation in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.